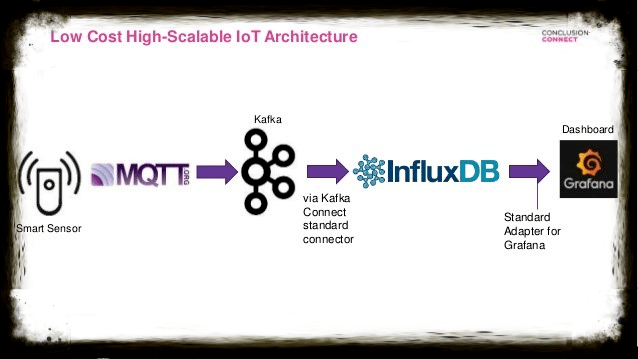

Kafka is a messaging system that was originally developed at LinkedIn and has since grown into a broader streaming platform. It provides a scalable architecture for the Internet of Things.

This post covers streaming with Kafka and its built-in streaming engine, and looks at some of the use cases our customers have for IoT devices.

IOT CHALLENGES

➤ Unreliable communication channels (e.g. mobile)

➤ Constrained Devices

➤ Low Bandwidth and High Latency environments

➤ Bi-directional communication required

➤ Security

➤ Instantaneous data exchange

➤ Scalability in IoT

Rise of Apache Kafka

Various IoT Data Protocols

• MQTT (Message Queue Telemetry Transport)

• CoAP

• AMQP

• REST

• WebSockets

• …

KAFKA IOT Hub

Apache Kafka is a real-time streaming platform that is gaining broad adoption within large and small organizations. Kafka’s distributed microservices architecture and publish/subscribe protocol make it ideal for moving real-time data between enterprise systems and applications. By some accounts, over one third of Fortune 500 companies are using Kafka. On GitHub, Kafka is one of the most popular Apache projects, with over 11K stars and over 500 contributors. Without a doubt, Kafka is an open source project that is changing how organizations move data inside their cloud and data center.

For IoT use cases where devices are connected to the data center or cloud over the public Internet, the Kafka architecture isn’t suitable out of the box. If you attempt to stream data from thousands or even millions of devices using Kafka over the Internet, you will run into its limits. There are a number of reasons Kafka by itself isn’t well suited for IoT use cases:

- Kafka brokers need to be addressed directly by the clients, which means each client must be able to connect to the Kafka brokers directly. Professional IoT deployments usually use a load balancer as the first line of defense in their cloud, so devices only use the IP address of the load balancer to connect to the infrastructure, and the load balancer effectively acts as a proxy. If you want your devices to connect to Kafka directly, your Kafka brokers must be exposed to the public Internet.

- Kafka doesn’t support very large numbers of topics. When connecting many IoT devices over the public Internet, individual and unique topics (often with a unique IoT device identifier included in the topic name) are commonly used, so that read and write operations can be restricted based on the permissions of individual clients. You don’t want your smart thermostat to get hacked and its credentials then used to listen to all the data streams in the system.

- Kafka clients are reasonably complex and resource intensive compared to client libraries for IoT protocols. The Kafka APIs for most programming languages are simple and straightforward, but there is a lot of complexity under the hood. For instance, the client uses and maintains multiple TCP connections to the Kafka brokers. IoT deployments often have constrained devices that need a minimal footprint and don’t require very high throughput on the device side. Kafka clients are by default optimized for throughput.

- Kafka clients require a stable TCP connection for best results. Many IoT use cases involve unreliable networks, for instance connected cars or smart agriculture, so a typical IoT device would need to reestablish its connection to Kafka again and again.

- It’s unusual (and very often not even possible) to have tens of thousands or even millions of clients connected to a single Kafka cluster. In IoT use cases, there are typically large numbers of devices that are simultaneously connected to the backend and constantly producing data.

- Kafka is missing some key IoT features. The Kafka protocol lacks features like keep alive and last will and testament. These features are important for building a resilient IoT solution that can deal with devices experiencing unexpected connection loss and suffering from unreliable networks.

KAFKA STRENGTHS

➤ Optimized to stream data between systems and applications in a scalable manner

➤ Scale-out with multiple topics and partitions and multiple nodes

➤ Perfect for system communication inside trusted network and limited producers and consumers

KAFKA ALONE IS NOT OPTIMAL

➤ For IoT use cases where devices are connected to the data center or cloud over the public Internet as first point of contact

➤ If you attempt to stream data from thousands or even millions of devices using Kafka over the Internet

KAFKA CHALLENGES

➤ Kafka clients need to address Kafka brokers directly, which is not possible with load balancers

IOT REALITY

➤ Clients are connected over the Internet

➤ Load Balancers are used as first line of defense

➤ IP addresses of infrastructure (e.g. Kafka nodes) not exposed to the public Internet

➤ Load Balancers effectively act as proxy

KAFKA CHALLENGES

➤ Kafka is hard to scale to multiple hundreds of thousands or millions of topics

IOT REALITY

➤ IoT devices typically are segmented to use individual topics

➤ Individual topics very often contain data like a unique device identifier

➤ Multiple millions of topics can be used in a single IoT scenario

➤ Ideal for security as it’s possible to restrict devices to only produce and consume for specific topics

➤ Topics are usually dynamic
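The idea of restricting each device to its own topics can be sketched in a few lines. This is only an illustration: the `devices/<device_id>/...` topic layout and the names `DeviceCredentials` and `is_authorized` are assumptions made for this example, not part of MQTT, Kafka, or any particular broker.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceCredentials:
    """Hypothetical credentials object; only the device id matters here."""
    device_id: str


def allowed_topic_prefix(creds: DeviceCredentials) -> str:
    # Assumed topic layout: devices/<device_id>/<anything>
    # The trailing slash prevents "thermostat-42" from matching "thermostat-421".
    return f"devices/{creds.device_id}/"


def is_authorized(creds: DeviceCredentials, topic: str) -> bool:
    """A device may only produce or consume below its own topic prefix."""
    return topic.startswith(allowed_topic_prefix(creds))


thermostat = DeviceCredentials("thermostat-42")
print(is_authorized(thermostat, "devices/thermostat-42/temperature"))
print(is_authorized(thermostat, "devices/door-lock-7/state"))
```

With a rule like this, stolen thermostat credentials only expose the thermostat’s own topics. The price is one topic subtree per device, which is where the topic counts in the millions come from.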

KAFKA CHALLENGES

➤ Kafka clients are reasonably complex by design (e.g. use multiple TCP connections)

➤ Libraries optimized for throughput

➤ APIs for Kafka libraries are simple to use but the behavior sometimes isn’t configurable easily (e.g. async send() method can block)

IOT REALITY

➤ IoT devices are typically very constrained (computing power and memory)

➤ Device programmers need very simple APIs AND full flexibility when it comes to library behavior

➤ Single IoT devices typically don’t require a lot of throughput

➤ Important to limit and understand the number of TCP connections, especially over the Internet. Very often only one TCP connection to the backend desired

KAFKA CHALLENGES

➤ No on/off notification mechanism

➤ No keep-alive mechanism for the individual TCP connections of producers

➤ Kafka Protocol for producers rather heavyweight over the Internet (lots of communication)

IOT REALITY

➤ Features like on/off notifications are often required

➤ Unreliable networks require lightweight keep-alive mechanisms for producers and consumers (half-open connections)

➤ Device communication over the Internet requires minimal communication overhead
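To make the keep-alive and on/off notification ideas concrete, here is a small simulation of what an MQTT-style broker does with a silent client. It is a sketch, not a broker: `TinyBroker` and its methods are hypothetical names, and time is passed in explicitly so the example stays deterministic. The one detail taken from the MQTT specification is that a client is considered gone after one and a half times its keep-alive interval without any packet.

```python
from dataclasses import dataclass


@dataclass
class Session:
    keep_alive_s: float
    will_topic: str
    will_payload: bytes
    last_seen: float


class TinyBroker:
    """Hypothetical in-memory model of keep-alive and last-will handling."""

    def __init__(self):
        self.sessions = {}    # client_id -> Session
        self.published = []   # (topic, payload) pairs sent to subscribers

    def connect(self, client_id, keep_alive_s, will_topic, will_payload, now):
        self.sessions[client_id] = Session(keep_alive_s, will_topic, will_payload, now)

    def packet_received(self, client_id, now):
        # Any packet, including a tiny ping, counts as a sign of life.
        self.sessions[client_id].last_seen = now

    def expire_silent_clients(self, now):
        for client_id, s in list(self.sessions.items()):
            if now - s.last_seen > 1.5 * s.keep_alive_s:
                # The client vanished without saying goodbye: publish its will.
                self.published.append((s.will_topic, s.will_payload))
                del self.sessions[client_id]


broker = TinyBroker()
broker.connect("car-1", keep_alive_s=60, will_topic="cars/car-1/status",
               will_payload=b"offline", now=0)
broker.packet_received("car-1", now=50)
broker.expire_silent_clients(now=100)   # silent for 50 s: still connected
broker.expire_silent_clients(now=141)   # silent for 91 s: will is published
print(broker.published)
```

Backend applications that subscribe to the will topic get an “offline” notification even though the device never had a chance to send one, which is the on/off mechanism the Kafka protocol does not provide.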

Kafka still brings a lot of value to IoT use cases. IoT solutions create large amounts of real-time data that is well suited for being processed by Kafka. The challenge is: how do you bridge the IoT data from the devices to the Kafka cluster?

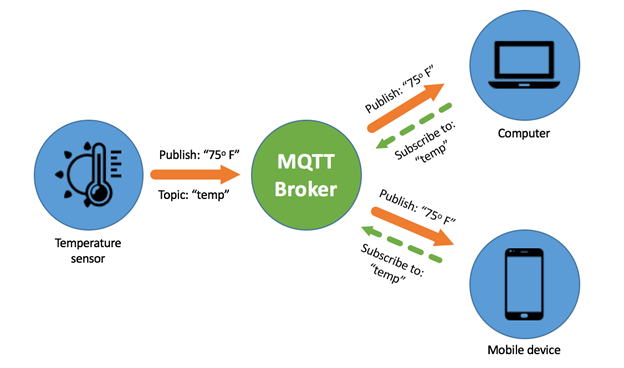

Many companies that implement IoT use cases are looking at ways of integrating MQTT and Kafka to process their IoT data. MQTT is another publish/subscribe protocol, and it has become the standard for connecting IoT device data. The MQTT standard is designed for connecting large numbers of IoT devices over unreliable networks, addressing many of the limitations of Kafka. In particular, MQTT is a lightweight protocol requiring a small client footprint on each device. It is built to securely support millions of connections over unreliable networks and works seamlessly in high-latency and low-throughput environments. It includes IoT features like keep alive, last will and testament functionality, different quality of service levels for reliable messaging, and client-side load balancing (Shared Subscriptions), among other features designed for public Internet communication. Topics are dynamic, which means an arbitrary number of MQTT topics can exist in the system, very often up to tens of millions of topics per deployment in MQTT server clusters.

While Kafka and MQTT have different design goals, both work extremely well together. The question isn’t Kafka vs MQTT, but how to integrate both worlds for an end-to-end IoT data pipeline. In order to integrate MQTT messages into a Kafka cluster, you need some type of bridge that forwards MQTT messages into Kafka. There are four different architecture approaches for implementing this type of bridge:

WHAT IS MQTT?

➤ Most popular IoT Messaging Protocol

➤ Minimal Overhead

➤ Publish / Subscribe

➤ Easy

➤ Binary

➤ Data Agnostic

➤ Designed for reliable communication over unreliable channels

➤ ISO Standard
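“Binary” and “minimal overhead” can be made tangible by building a packet by hand. The sketch below encodes an MQTT 3.1.1 PUBLISH packet at QoS 0; the function names and the sample topic are made up for this example, and MQTT 5 adds a properties field that is not modeled here.

```python
def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 bits per byte, high bit means 'more'."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte = n % 128
        n //= 128
        if n > 0:
            byte |= 0x80
        out.append(byte)
        if n == 0:
            return bytes(out)


def encode_publish_qos0(topic: str, payload: bytes) -> bytes:
    """Build a PUBLISH packet with QoS 0, no DUP and no RETAIN flag."""
    topic_bytes = topic.encode("utf-8")
    # Variable header: 2-byte big-endian topic length followed by the topic.
    # QoS 0 carries no packet identifier.
    body = len(topic_bytes).to_bytes(2, "big") + topic_bytes + payload
    # Fixed header: packet type 3 (PUBLISH) in the upper nibble, flags 0.
    return bytes([0x30]) + encode_remaining_length(len(body)) + body


packet = encode_publish_qos0("car/42/speed", b"87")
overhead = len(packet) - len("car/42/speed") - len(b"87")
print(packet.hex(), overhead)
```

For this message the protocol adds four bytes on top of the topic name and the payload, and the payload itself is opaque to MQTT, which is what “data agnostic” refers to.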

USE CASES

➤ Push Communication

➤ Unreliable communication channels (e.g. mobile)

➤ Constrained Devices

➤ Low Bandwidth and High Latency environments

➤ Communication from the backend to IoT device

➤ Lightweight backend communication

Publish/Subscribe

HOW TO USE KAFKA FOR IOT?

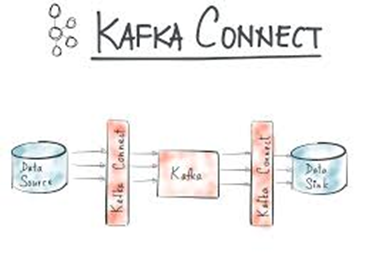

Kafka Connect

Kafka has an extension framework, called Kafka Connect, that allows Kafka to ingest data from other systems. Kafka Connect for MQTT acts as an MQTT client that subscribes to all the messages from an MQTT broker.

If you don’t have control of the MQTT broker, Kafka Connect for MQTT is a worthwhile approach to pursue. This approach allows Kafka to ingest the stream of MQTT messages.

There are performance and scalability limitations with using Kafka Connect for MQTT. As mentioned, Kafka Connect for MQTT is an MQTT client that subscribes to potentially ALL the MQTT messages passing through a broker. MQTT client libraries aren’t intended to process extremely large amounts of MQTT messages so IoT systems using this approach will have performance and scalability issues.
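Why a single subscribing client ends up with every message is easiest to see from MQTT’s topic filter rules. The sketch below implements filter matching with the `+` (one level) and `#` (all remaining levels) wildcards; `topic_matches` is a name chosen for this example, the sample topics are invented, and the filter is assumed to be well formed (`#` only as the last level).

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic filter matches a concrete topic name."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Filters that start with a wildcard do not match topics beginning with "$".
    if topic.startswith("$") and f_levels[0] in ("#", "+"):
        return False
    for i, f in enumerate(f_levels):
        if f == "#":
            return True               # matches the parent and everything below it
        if i >= len(t_levels):
            return False              # filter is longer than the topic
        if f != "+" and f != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


topics = ["plant/1/temperature", "cars/7/speed", "home/thermostat-42/state"]
print([t for t in topics if topic_matches("#", t)])            # everything
print([t for t in topics if topic_matches("cars/+/speed", t)])  # one topic
```

A connector subscribed to `#` is one MQTT client on the receiving end of the combined traffic of all devices, which is the bottleneck described above.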

This approach centralizes business and message transformation logic and creates tight coupling, which should be avoided in distributed (microservice) architectures. The industry leading consulting firm Thoughtworks called this an anti-pattern and even put Kafka into the “Hold” category in their previous Technology Radar publications.

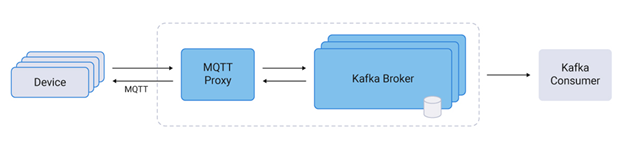

MQTT proxy

Another approach is the use of a proxy application that accepts the MQTT messages from IoT devices but doesn’t implement publish/subscribe or any MQTT session features, and thus isn’t an MQTT broker. The IoT devices connect to the MQTT proxy, which then pushes the MQTT messages into the Kafka broker.

The MQTT proxy approach allows the MQTT message processing to be done within your Kafka deployment, so management and operations can be done from a single console. An MQTT proxy is usually stateless, so it could (in theory) scale independently of the Kafka cluster by adding multiple instances of the proxy.
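The forwarding step such a proxy performs can be sketched as a pure function from an MQTT message to a Kafka record. Everything specific here is an assumption for illustration: the `devices/<device_id>/...` topic layout, the single Kafka topic `iot-telemetry`, the `mqtt.topic` header name, and the `KafkaRecord` type, which is a plain tuple rather than a class from any Kafka client library.

```python
from typing import NamedTuple, Tuple


class KafkaRecord(NamedTuple):
    """Plain stand-in for a Kafka record; not a type from a Kafka client."""
    topic: str
    key: bytes
    value: bytes
    headers: Tuple[Tuple[str, bytes], ...]


def to_kafka_record(mqtt_topic: str, payload: bytes,
                    kafka_topic: str = "iot-telemetry") -> KafkaRecord:
    # Assumed MQTT topic layout: devices/<device_id>/<measurement...>
    levels = mqtt_topic.split("/")
    if len(levels) < 3 or levels[0] != "devices" or not levels[1]:
        raise ValueError(f"unexpected MQTT topic: {mqtt_topic!r}")
    device_id = levels[1]
    return KafkaRecord(
        topic=kafka_topic,
        # Keying by device id keeps one device's records in one partition.
        key=device_id.encode("utf-8"),
        value=payload,
        # Keep the original MQTT topic so consumers can still see it.
        headers=(("mqtt.topic", mqtt_topic.encode("utf-8")),),
    )


print(to_kafka_record("devices/thermostat-42/temperature", b"21.5"))
```

Mapping many MQTT topics onto one keyed Kafka topic is one way to avoid creating a Kafka topic per device. Because the function keeps no state, any number of proxy instances could run it side by side.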

The limitation of the MQTT proxy is that it’s not a true MQTT implementation. An MQTT proxy isn’t based on pub/sub. Instead, it creates a tightly coupled stream between a device and Kafka. The advantage of MQTT pub/sub is that it creates a loosely coupled system of end points (devices or backend applications) that can communicate and move data between each other. For example, MQTT allows communication between two devices, such as two connected cars talking to each other, but an MQTT proxy application would only allow data transmission from a car to a Kafka cluster, not to another car.

Some Kafka MQTT proxy applications support features like QoS levels. It’s noteworthy that resuming a QoS message flow after a connection loss is only possible if the MQTT client reconnects to the same MQTT proxy instance, which can’t be guaranteed if a load balancer with a least-connection or round-robin strategy is used for scalability. So the main reason for using QoS levels in MQTT, which is avoiding message loss, only holds for stable connections, and that is an unrealistic assumption in most IoT scenarios.
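The reconnect problem can be shown with a toy model. The sketch assumes that each proxy instance keeps the state of unfinished QoS flows only in its own memory; `ProxyInstance`, its methods, and the client and packet ids are hypothetical and do not describe any specific product.

```python
from itertools import cycle


class ProxyInstance:
    """Hypothetical proxy that tracks unfinished QoS flows in local memory."""

    def __init__(self, name):
        self.name = name
        self.inflight = {}   # client_id -> set of packet ids awaiting completion

    def remember_inflight(self, client_id, packet_id):
        self.inflight.setdefault(client_id, set()).add(packet_id)

    def resume(self, client_id):
        """Return the unfinished flows this instance knows for the client."""
        return self.inflight.get(client_id, set())


proxies = [ProxyInstance("proxy-a"), ProxyInstance("proxy-b")]
round_robin = cycle(proxies)            # what a round-robin load balancer does

first = next(round_robin)               # initial connection lands on proxy-a
first.remember_inflight("car-1", packet_id=17)
# ... the mobile network drops before the flow for packet 17 completes ...
second = next(round_robin)              # the reconnect lands on proxy-b

print(first.name, first.resume("car-1"))
print(second.name, second.resume("car-1"))
```

The second instance has no record of packet 17, so the flow cannot be resumed there. Avoiding this takes either sticky routing to the original instance or session state that is shared across instances.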

The main risk of using such an approach is that a proxy isn’t a fully featured MQTT broker. It is not an MQTT implementation as defined by the MQTT specification; it only implements a small subset, so it’s not a standardized solution. In order to use MQTT with MQTT clients properly, a fully featured MQTT broker is required.

If message loss isn’t a critical factor and the MQTT features designed for reliable IoT communication aren’t used, the proxy approach can be a lightweight alternative when you only want to send data unidirectionally to Kafka over the Internet.

—